Image-reading accuracy increases with deep-learning DR algorithms

A deep-learning algorithm increased the accuracy of reading images for detecting retinal pathologies in patients with diabetes.

With the recent surge in

“The push behind this has been to increase access to care for patients internationally in underserved areas that have limited resources and specialists,” said

That reach has also extended within the United States where patients with diabetes have relatively suboptimal adherence to screening guidelines for retinopathy, said Dr. Rahimy, vitreoretinal surgeon,

The first publication from the

These findings, and those of other related studies, are exciting but at the same time have opened a Pandora’s box of issues that need to be addressed, he noted.

The first is how retina specialists and society will come to trust machine learning, given that the way these algorithms arrive at their decisions or predictions is not currently transparent to the operator. In a future in which humans co-exist with machines, some degree of human oversight will still be required, and how that oversight will take place remains to be determined.

Dr. Rahimy and colleagues conducted a study to understand the impact of deep-learning DR algorithms on physician-readers in computer-assisted settings.

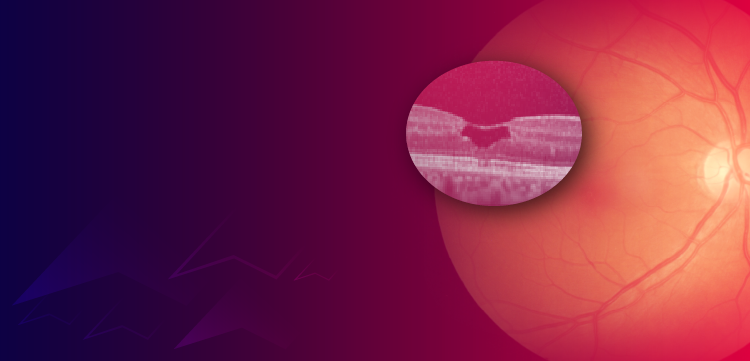

In this study, the computer provided two forms of assistance, he noted: histograms of grade scores, weighted toward the diagnosis the algorithm favored for an image, and heatmaps highlighting the areas that contributed most to the prediction.

The study, which included 10 physicians who read 1,796 images, had three scenarios in which the readers were:

- unassisted in reading the images and determining a diagnosis;

- assisted by the grades provided and favored by the algorithm, or

- assisted by both the grades and the heatmaps provided by the algorithm, Dr. Rahimy explained.

The images, which were provided by

Study results

The study demonstrated the beneficial effects of computer-assisted reading of images.

“Overall, we saw that computer assistance in this study actually helped improve the five-class diagnostic accuracy,” Dr. Rahimy said. “This improvement was really driven by cases in which there was pathology.

“When DR was present, we saw a substantial improvement by having grade assistance as well as the grades and the heatmaps together,” Dr. Rahimy added.

A noteworthy study finding was that improvement depended on the background of the reader.

Results showed that when general ophthalmologists read the images unassisted, the level of accuracy was not as high as the level of the algorithm. When general ophthalmologists were assisted by grades provided by the algorithm and by both the grades and heatmaps, their accuracy levels improved to the level of the algorithm, he pointed out.

When the retina specialists read the images unassisted, their accuracy matched that of the algorithm. When grades, or grades plus heatmaps, were added, the retina specialists exceeded the accuracy level of the algorithm.

The sensitivity increased as a result of the computer assistance.

Dr. Rahimy reported that for moderate nonproliferative DR, when readers were unassisted the mean sensitivity was 79.4%. With grades only, the sensitivity rose to 87.5%, and with grades and heatmaps, it increased further to 88.7%.

The increase in sensitivity came with only a slight drop in specificity. For the same pathology, the respective specificities were 96.6%, 96.1%, and 95.5%.
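For readers less familiar with these metrics, the following is a minimal sketch of how sensitivity and specificity for a single grade can be computed one-vs-rest from five-class readings. It is not the study's analysis code. The function name, the numeric encoding of the grades (0 for no DR through 4 for proliferative DR), and the toy labels are all assumptions made for illustration and are not study data.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(
    truth: Sequence[int], predicted: Sequence[int], target: int
) -> Tuple[float, float]:
    """One-vs-rest sensitivity and specificity for a single grade.

    Assumes at least one image with the target grade and at least one
    without it, so neither denominator is zero.
    """
    pairs = list(zip(truth, predicted))
    tp = sum(1 for t, p in pairs if t == target and p == target)
    fn = sum(1 for t, p in pairs if t == target and p != target)
    tn = sum(1 for t, p in pairs if t != target and p != target)
    fp = sum(1 for t, p in pairs if t != target and p == target)
    sensitivity = tp / (tp + fn)  # share of true target-grade images caught
    specificity = tn / (tn + fp)  # share of other images correctly not flagged
    return sensitivity, specificity


# Hypothetical toy labels (NOT study data); assumed encoding: 0 = no DR,
# 1 = mild, 2 = moderate, 3 = severe nonproliferative DR, 4 = proliferative DR.
truth = [0, 0, 2, 2, 2, 2, 1, 3, 0, 4]
predicted = [0, 0, 2, 2, 1, 2, 1, 3, 2, 4]
MODERATE = 2

sens, spec = sensitivity_specificity(truth, predicted, MODERATE)
print(f"sensitivity={sens:.3f}, specificity={spec:.3f}")
```

In this toy example, one of the four moderate cases is undergraded, which lowers sensitivity, and one image without moderate DR is graded as moderate, which lowers specificity. Assistance that catches more true cases without flagging more of the other images is the pattern the reported figures describe.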

When the readers were questioned about their confidence in computer-assisted reading, they reported increasing levels of confidence as they moved from the unassisted scenario to assistance with grades and then to assistance with grades and heatmaps.

Another finding was that the time in seconds required to reach a diagnosis increased with the added levels of assistance regardless of whether DR was present. The readers were not informed before the study that the time to diagnosis was measured.

“Over the course of the experiment, the time spent on the task decreased across all conditions, suggesting that there was a learning curve,” Dr. Rahimy said.

“Assistance with a deep-learning algorithm can improve the accuracy of and the confidence in the diagnosis of DR and prevent underdiagnosis by improving sensitivity with little to no loss of specificity,” he concluded. “The effects depend on the background of the reader. In some cases, doctor plus assistance is greater than either alone.”

Disclosures:

Ehsan Rahimy, MD

E: erahimy@gmail.com

Dr. Rahimy is a physician consultant to Google.
