Applying AI in fundus images

FDA decision changes scope of healthcare delivery; increases patient access to early detection of DR

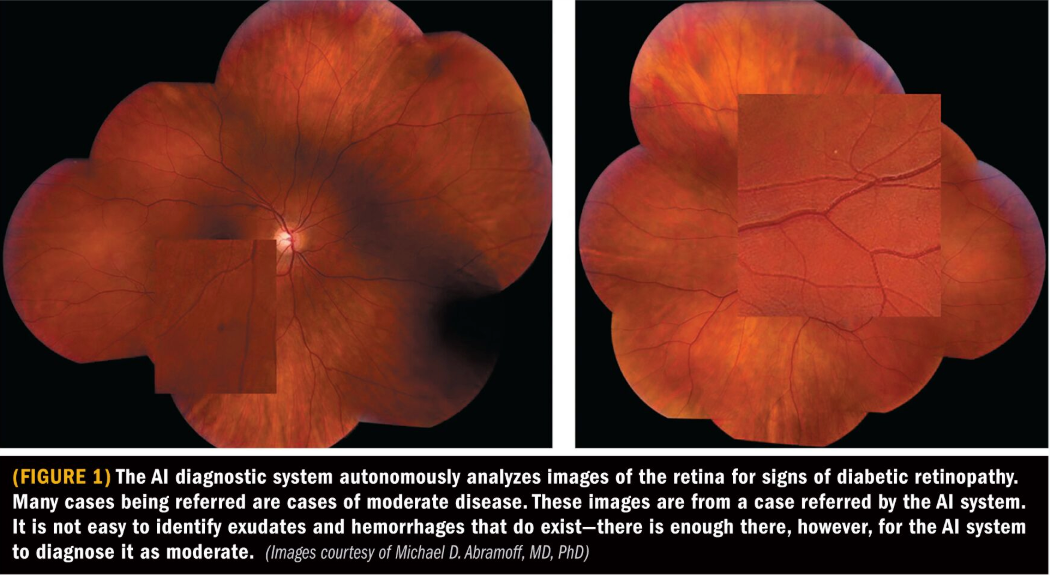

Last year's approval of the first autonomous artificial intelligence (AI) system able to make a diagnosis without a physician has opened new doors. It is the first medical device to use AI to diagnose moderate or worse diabetic retinopathy or macular edema in adults who have diabetes.

Reviewed by Michael D. Abramoff, MD, PhD

A decision by the FDA in April 2018 changed the game for identifying patients at risk of vision loss. The agency authorized the marketing of an AI system (IDx-DR) that enables the automated detection of diabetic retinopathy (DR) in primary care. It was the first time the agency had granted clearance for an autonomous AI diagnostic system that does not require a physician to interpret results.

This advancement will lead to changes in healthcare delivery by increasing patient access to early detection of diabetic retinopathy, noted Michael D. Abramoff, MD, PhD, the Robert C. Watzke, MD Professor in Retina Research, Department of Ophthalmology and Visual Sciences, University of Iowa Carver College of Medicine, Iowa City. The autonomous AI diagnostic system diagnoses DR by itself. It requires no human oversight and, importantly, it aligns with clinical standards.

The system has been designed and tested for use in a primary-care setting, where it can provide a point-of-care diagnosis in a few minutes. The system includes a robotic camera and therefore requires operator training, but training is minimal. Existing staff may be trained in a few hours, even if they have not done retinal imaging.

The algorithm is based on how clinicians look at DR: it uses machine-learning detectors for the exudates, hemorrhages, microaneurysms, and other lesions that indicate DR, noted Dr. Abramoff, who is also founder and chief executive officer of IDx.

The outputs of these detectors are combined, leading to two categories at the patient level:

1. No or mild DR, which can be re-examined in 12 months, according to the American Academy of Ophthalmology Preferred Practice Pattern (AAO PPP).

2. More than mild DR and/or macular edema (ME), which needs to be examined by an eye-care provider and may need treatment, according to the AAO PPP.
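As a minimal sketch, the two patient-level outputs and the follow-up the article associates with each can be written as a small lookup. The names `PatientResult` and `follow_up` are hypothetical and chosen for illustration; this is not the IDx-DR software, and it does not model how the detector outputs are combined, which the article does not describe.

```python
from enum import Enum


class PatientResult(Enum):
    """Hypothetical labels for the two patient-level outputs listed above."""
    NO_OR_MILD_DR = "no or mild DR"
    MORE_THAN_MILD_DR_OR_ME = "more than mild DR and/or ME"


def follow_up(result: PatientResult) -> str:
    """Return the follow-up action the article pairs with each output."""
    if result is PatientResult.NO_OR_MILD_DR:
        return "re-examine in 12 months"
    # Every other result falls in the second category.
    return "refer to an eye-care provider"


for result in PatientResult:
    print(f"{result.value}: {follow_up(result)}")
```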

Practice patterns

The system output aligns very closely with the AAO Preferred Practice Pattern. A “no or mild DR” result requires no more than a review at 12 months. All other stages, including both center-involved and clinically significant diabetic macular edema (DME) as well as all more severe stages of DR, require closer follow-up and, in some cases, treatment.

ETDRS

The most commonly used standard for deciding the severity of DR is the Early Treatment Diabetic Retinopathy Study (ETDRS) severity scale. In the pivotal trial, the autonomous AI system was compared with this standard.

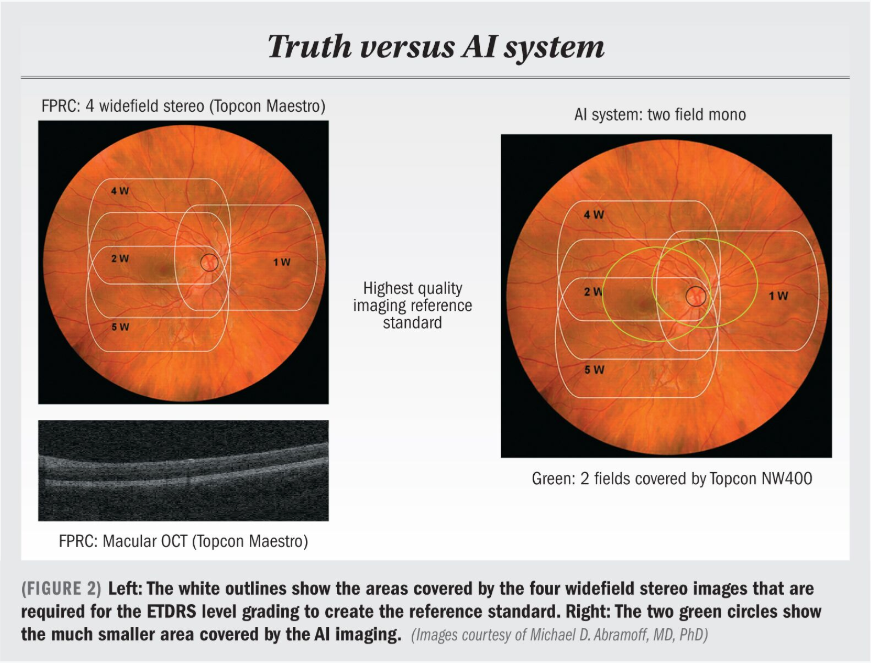

In Figure 2, the white outlines in the image on the left show the areas covered by the four widefield stereo images that are required for the ETDRS level grading to create the reference standard. Macular optical coherence tomography (OCT), shown in the lower left, was also obtained to determine center-involved DME for the reference standard.

In the image on the right, the two green circles show the much smaller area covered by the AI imaging. While these two non-stereo images are more patient-friendly to obtain than the eight flash widefield images required for ETDRS, the area covered is smaller. Any hemorrhages or other lesions outside the green areas will therefore be missed by the AI system but will still contribute to the reference standard used to determine whether the AI system was correct.

Clinical trial

A study was conducted on 900 subjects with diabetes from primary care clinics around the United States, many of which did not have an ophthalmic clinic nearby.1

The AI system was operated by minimally trained operators who had to confirm, before the start of the study, that they had never imaged the retina. The aforementioned ETDRS reference standard, by contrast, was obtained by highly experienced, certified retinal photographers. The reference standard was then compared with the output of the autonomous AI system.

The results showed that the AI system's sensitivity, meaning its ability to detect moderate or worse DR and/or ME, was 87%; that is, 87% of cases were caught. Its specificity, meaning its ability to correctly identify those without disease, was 90.7%.
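For readers less familiar with these two measures, the sketch below shows how they are computed from a comparison against a reference standard. The counts are made-up round numbers chosen only so that the output matches the percentages reported here; they are not the trial's actual counts, and the function names are illustrative.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Fraction of diseased subjects that the test flags as diseased."""
    diseased = true_pos + false_neg
    if diseased == 0:
        raise ValueError("no diseased subjects in the sample")
    return true_pos / diseased


def specificity(true_neg: int, false_pos: int) -> float:
    """Fraction of disease-free subjects that the test reports as negative."""
    healthy = true_neg + false_pos
    if healthy == 0:
        raise ValueError("no disease-free subjects in the sample")
    return true_neg / healthy


# Illustrative counts only (assumption), not data from the study.
print(f"sensitivity: {sensitivity(true_pos=87, false_neg=13):.1%}")   # 87.0%
print(f"specificity: {specificity(true_neg=907, false_pos=93):.1%}")  # 90.7%
```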

Board-certified ophthalmologists, compared with this same ETDRS reference standard but without OCT, have shown sensitivities of 34%, 33%, and 73% in the only available studies comparing them with full ETDRS.

The likely reason is that, while ophthalmologists are highly experienced in identifying no DR and severe DR, it is much harder to differentiate precisely between mild and moderate DR, which can depend on the presence of a single hemorrhage.

Another important measure is imageability, the capacity of the AI system to make a clinical decision rather than report that image quality is insufficient for a diagnosis. Insufficient quality was reported in only 4% of cases, so in 96% of cases in the study the AI system was able to make a clinical diagnosis.
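Imageability as described here is the share of exams that end in a diagnostic output. A minimal sketch, assuming each exam is recorded as either a result string or `None` when image quality was insufficient (a hypothetical encoding, not the system's actual output format):

```python
from typing import Optional, Sequence


def imageability(outputs: Sequence[Optional[str]]) -> float:
    """Fraction of exams that produced a diagnostic output (not None)."""
    if not outputs:
        raise ValueError("no exams supplied")
    diagnosed = sum(1 for output in outputs if output is not None)
    return diagnosed / len(outputs)


# Illustrative sample (assumption): 100 exams, 4 of insufficient quality.
# The 70/26 split between the two results is arbitrary.
exams = (
    ["no or mild DR"] * 70
    + ["more than mild DR and/or ME"] * 26
    + [None] * 4
)
print(f"imageability: {imageability(exams):.0%}")  # 96%
```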

Disclosures:

Michael D. Abramoff, MD, PhD

E: michael-abramoff@uiowa.edu

This article was adapted from Dr. Abramoff’s presentation during a retinal imaging symposium co-sponsored by the Macula Society at the 2018 meeting of the American Academy of Ophthalmology. He is an inventor on patents and patent applications assigned to the University of Iowa. He is founder and CEO of IDx Technologies Inc., and is also a director and shareholder in this company. Dr. Abramoff receives financial support from Alimera Sciences.

References:

1. Abramoff, MD, et al. (2018). “Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices.” npj Digital Medicine. 1(1): 39. https://www.nature.com/articles/s41746-018-0040-6.
