Investigating the quality of real-world evidence in retinal disease

Real-world evidence is growing in importance as a source of information that can help support clinical decision-making when evaluated properly.

This article was originally published in Ophthalmology Times Europe.

It is accepted that randomized controlled trials (RCTs) are the gold standard for establishing the efficacy of a clinical intervention. However, because such studies typically have strict inclusion criteria and stringent requirements for patient follow-up, they tend to achieve greater patient adherence than is seen in the clinic and can exclude some of the types of patients routinely seen in day-to-day practice.1,2

In addition, RCTs can be extremely expensive to conduct, and study enrollment can be relatively slow compared with nonrandomized studies.3 This is where data collected in routine clinical practice come in. Such data enable the generation of real-world evidence (RWE), which can complement information from RCTs by providing insights into the safety and effectiveness of an intervention in broader patient populations under routine care conditions.1

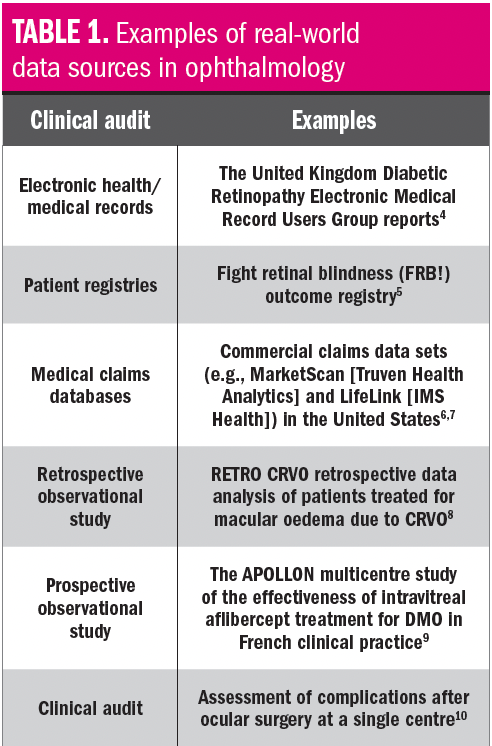

Abbreviations: CRVO, central retinal vein occlusion; DMO, diabetic macular oedema.

This evidence can be derived from real-world data drawn from various sources, including electronic health or medical records, patient registries, medical claims databases, retrospective or prospective observational studies, and clinical audits (Table 1).1,4-10 RWE is increasingly used to support drug approvals11 and, in health technology assessments, reimbursement decisions.12

In that respect, RWE is already guiding clinical practice. With a better understanding of how to assess the quality of real-world studies, clinicians can use this evidence appropriately to inform their own decision-making at both the practice and the patient level.

In ophthalmology, a growing global evidence base describes the use of intravitreal anti-VEGF therapy in routine clinical practice.5,6,9,10 Compared with RCTs, these studies have shown poorer adherence to anti-VEGF therapy and undertreatment in routine practice, including nonpersistence, whereby patients discontinue therapy in the medium to long term.2

This remains a significant barrier to optimizing real-world outcomes for patients with chronic, progressive retinal conditions, such as neovascular age-related macular degeneration (nAMD). More RWE on effective strategies that clinics and practices can use to improve adherence and persistence with anti-VEGF agents would certainly be welcome.

In addition, data collection in real-world clinical practice has inherent limitations; as a result, RWE may vary in quality.13 This is partly because of heterogeneity in the data obtained from different sources and variability in both how RWE methodologies are applied and how the resulting evidence is interpreted. Furthermore, depending on the RWE source, data may be inconsistently collected, misclassified, or missing. Such errors are known as information bias.

A recent systematic review assessed 64 real-world ophthalmology data sources from 16 countries for the completeness of their data on different outcomes; only 10 scored highly.14 Most of these sources provided information on baseline status, clinical outcomes, and treatment, but few collected data on economic or patient-reported burden.

Another issue in ophthalmology is how treatment regimens are described, particularly for anti-VEGF agents. Intravitreal aflibercept, brolucizumab, pegaptanib, and ranibizumab have approved indications for retinal diseases. However, in some European countries, bevacizumab is used and reimbursed off-label, despite jurisdictional ambiguity and, often, legal risk for prescribing physicians.15

Studies are sometimes unclear about whether patients received fixed dosing, pro re nata (as-needed) therapy, or treat-and-extend dosing, and about how closely those schedules were followed.14 This makes understanding treatment effectiveness and comparing studies particularly challenging.

Although information bias may be one of the easiest types of bias to identify and quantify, RWE is also prone to other biases. Therapies may be prescribed differently depending on patient and disease characteristics, leading to selection and channelling biases (for example, when older patients or those with more advanced disease tend to receive one therapy over another).

In addition, patients or caregivers may be more likely to recall and report the most recent or impactful events, leading to recall bias. In some cases, events are more likely to be captured in one treatment group than in another, resulting in detection bias.16 Such limitations must be recognized, and RWE interpreted in that context.

Introducing a novel RWE quality assessment tool

Throughout medical school and early in their careers, clinicians are taught how to assess the robustness of clinical studies, learning about randomization, methods of blinding, and different types of controls.17 Being able to judge the quality of RWE is equally important.

RWE is becoming an increasingly important component of evidence-based treatment of retinal diseases. However, ophthalmology lacks clear guidance on how to assess the rigor of real-world studies and of the conclusions and recommendations they generate.

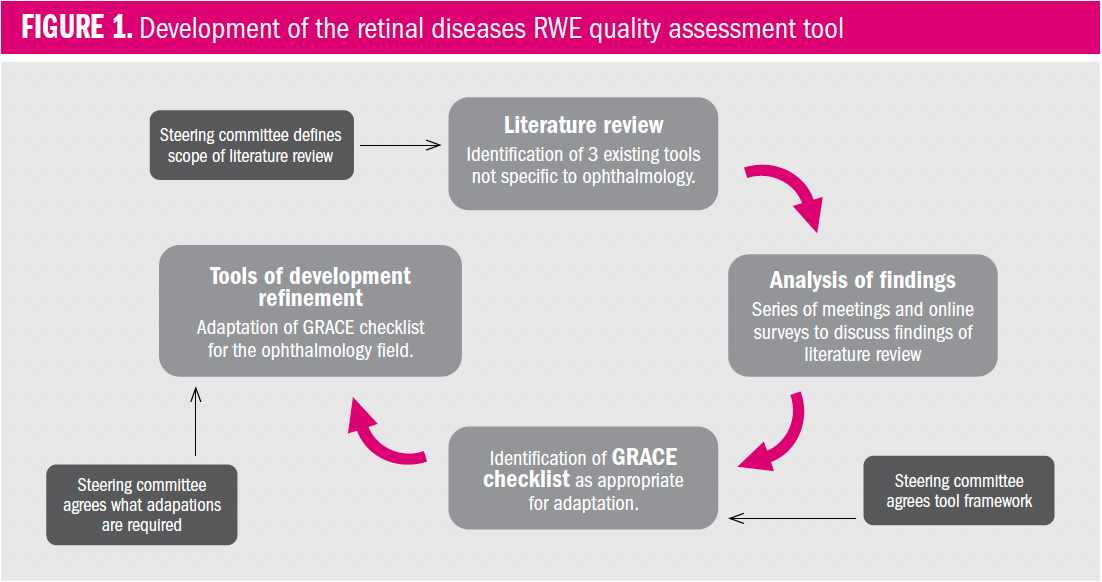

Abbreviations: GRACE, Good Research for Comparative Effectiveness; RWE, real-world evidence.

As shown in Figure 1, an RWE steering committee (a coalition of leading retinal specialists and methodological experts) recently developed a user-friendly framework for assessing the quality of available RWE for retinal diseases, including nAMD, diabetic macular oedema, and retinal vascular occlusion.13 The goal was to help ophthalmologists independently draw relevant and reliable conclusions from RWE and understand its applicability to their practice.

Building on a validated framework

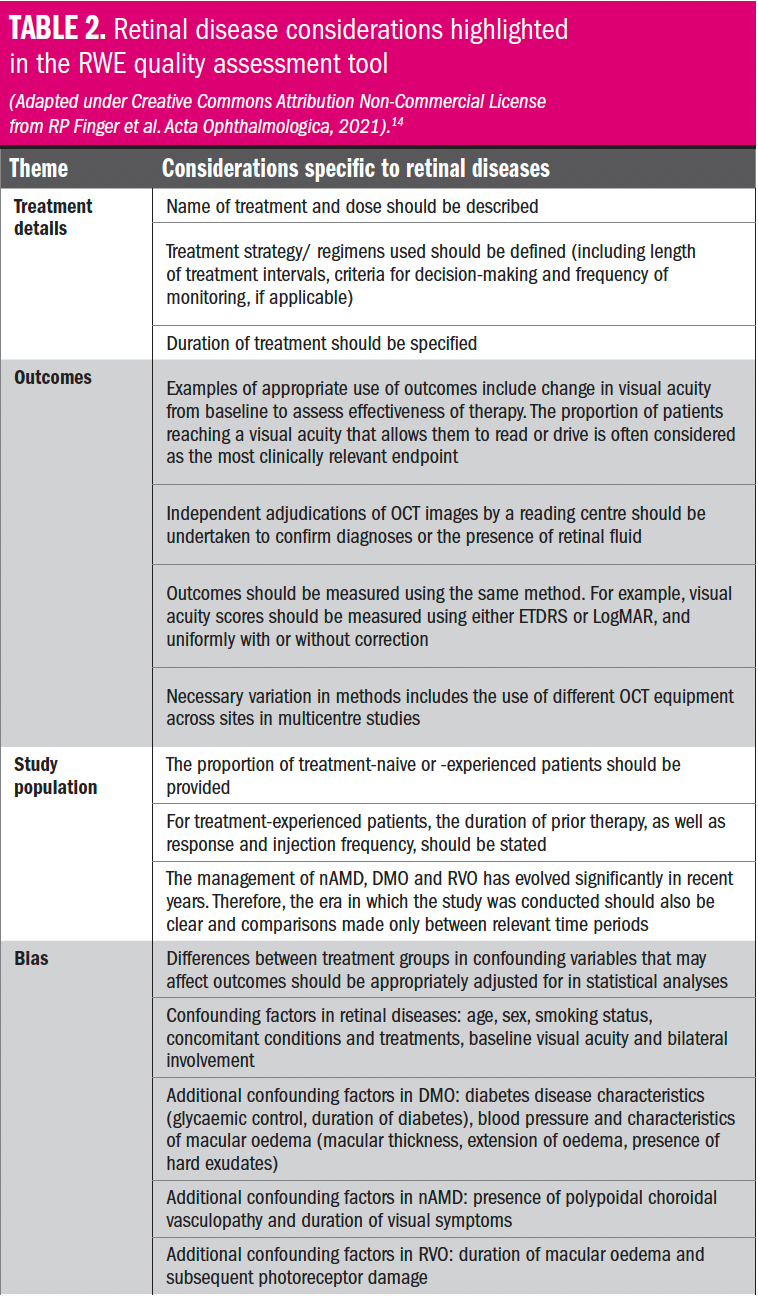

Abbreviations: DMO, diabetic macular oedema; ETDRS, Early Treatment Diabetic Retinopathy Study; nAMD, neovascular age-related macular degeneration; OCT, optical coherence tomography; RVO, retinal vascular occlusion.

The Good Research for Comparative Effectiveness (GRACE) checklist was selected as the basis of the RWE quality assessment tool. This is an 11-item screening tool that evaluates methodology and reporting to identify high-quality observational comparative effectiveness research.18 The checklist has been extensively validated, has demonstrated strong sensitivity and specificity, and can be successfully applied by a wide variety of users with different training backgrounds.

Although the checklist was developed specifically for comparative effectiveness research, many of its items were also considered applicable to noncomparative studies. The checklist was adapted for the retinal diseases field by omitting items not considered relevant to a practical, clinically focused ophthalmology audience and by adapting or combining some items for easier application.

Considerations when assessing quality of RWE

Adapting the GRACE checklist to make it more specific and relevant to ophthalmologists resulted in the retinal diseases RWE quality assessment tool.

The tool addresses treatment details, how outcomes were assessed and quantified, descriptors of the study population, and possible sources of bias. More details and examples of what to consider can be found in the full publication.13

The tool is designed to assess the quality of RWE generated by an individual study in a population of patients with retinal disease. Caution should be exercised when comparing RWE across different retinal disease studies because of the methodological heterogeneity described above, as well as heterogeneity in patient populations, disease characteristics, and treatment regimens.

Conclusions

RWE is growing in importance as a source of information that can help support clinical decision-making when evaluated properly. Regulators also increasingly accept that RWE may be needed to appraise the value of a therapy after it reaches the market. The retinal diseases RWE quality assessment tool can help clinicians understand which findings from real-world studies are most robust and applicable to their practice.